AI-assisted early detection of breast cancer

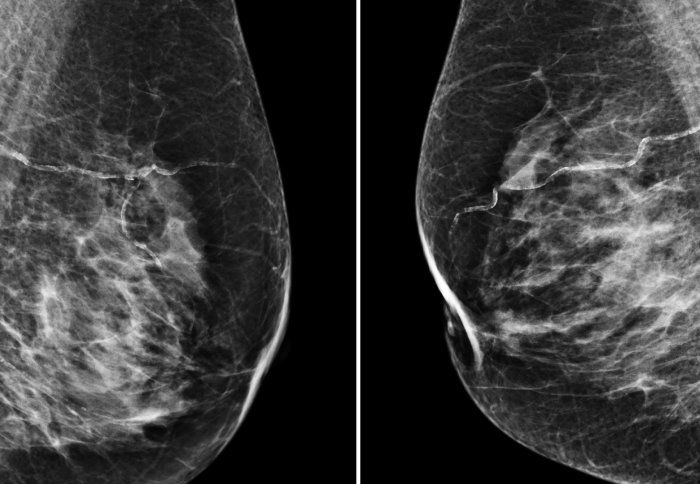

A computer algorithm has been shown to be as effective as human radiologists in spotting breast cancer from x-ray images.

The international team behind the study, which includes researchers from Google Health, DeepMind, Imperial College London, the NHS and Northwestern University in the US, designed and trained an artificial intelligence (AI) model on mammography images from almost 29,000 women.

The findings, published in Nature, show the AI was able to correctly identify cancers from the images with a similar degree of accuracy to expert radiologists, and that it holds the potential to assist clinical staff in practice.

When tested on a large UK dataset as part of the Cancer Research UK-funded OPTIMAM project and a smaller US dataset from Northwestern University, the AI also reduced the proportion of screening errors – where cancer was either incorrectly identified or where it may have been missed.

According to the researchers, the work demonstrates how the AI could potentially be applied in clinical settings around the world. The team highlights that such AI tools could support clinical decision-making in the future, as well as alleviate the pressure on healthcare systems internationally by reducing the workload of clinical reviewers.

Professor the Lord Ara Darzi of Denham, one of the authors of the paper and BRC Surgery and Surgical Technology Theme Lead, said: “Screening programmes remain one of the best tools at our disposal for catching cancer early and improving outcomes for patients, but many challenges remain – not least the current volume of images radiologists must review.

“While these findings are not directly from the clinic, they are very encouraging, and they offer clear insights into how this valuable technology could be used in real life.

“There will of course be a number of challenges to address before AI could be implemented in mammography screening programmes around the world, but the potential for improving healthcare and helping patients is enormous.”

In the UK, it’s estimated that one in eight women will be diagnosed with breast cancer in their lifetime, with the risk increasing with age. Early detection and treatment provide the best outcome for women, but accurately detecting and diagnosing breast cancer remains a significant challenge.

Women aged between 50 and 71 are invited to receive a mammogram on the NHS every three years, where an x-ray of the breast tissue is used to look for abnormal growths or changes which may be cancerous. While screening is highly effective and the majority of cancers are picked up during the process, even with significant clinical expertise human interpretation of the x-rays is open to errors.

In the latest study, researchers at Google Health trained an AI model on depersonalised patient data – using mammograms from women in the UK and US where any information that could be used to identify them was removed.

The AI model reviewed tens of thousands of images, which had been previously interpreted by expert radiologists. But while the human experts had access to the patient’s history when interpreting scans, the AI had only the most recent mammogram to go on.

During the evaluation, the researchers found their AI model could predict breast cancer from scans with a similar level of accuracy overall to expert radiologists (it was shown to be ‘non-inferior’). Compared to human interpretation, the AI showed an absolute reduction in the proportion of cases where cancer was incorrectly identified (5.7% in the US data and 1.2% in the UK data), as well as in cases where cancer was missed (9.4% in the US data and 2.7% in the UK data).
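To make the term "absolute reduction" concrete, here is a minimal Python sketch. It assumes the reported figures are percentage-point differences between the human and AI false positive and false negative rates. The `Counts` class, the `absolute_reduction` helper and all of the counts are hypothetical and made up for illustration. They are not the study's code or data.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Confusion-matrix counts for one reader (hypothetical container)."""
    true_pos: int
    false_pos: int
    true_neg: int
    false_neg: int

    def false_positive_rate(self) -> float:
        # Share of cancer-free cases wrongly flagged as cancer.
        return self.false_pos / (self.false_pos + self.true_neg)

    def false_negative_rate(self) -> float:
        # Share of cancer cases that were missed.
        return self.false_neg / (self.false_neg + self.true_pos)


def absolute_reduction(human_rate: float, ai_rate: float) -> float:
    """Difference in percentage points (not a relative change)."""
    return (human_rate - ai_rate) * 100


# Made-up counts for illustration only; these are not the study's data.
human = Counts(true_pos=80, false_pos=100, true_neg=900, false_neg=20)
ai = Counts(true_pos=85, false_pos=70, true_neg=930, false_neg=15)

fp_drop = absolute_reduction(human.false_positive_rate(), ai.false_positive_rate())
fn_drop = absolute_reduction(human.false_negative_rate(), ai.false_negative_rate())
print(f"False positives: {fp_drop:.1f} percentage points lower")
print(f"False negatives: {fn_drop:.1f} percentage points lower")
```

An absolute reduction is a subtraction of two rates, so it is smaller than the equivalent relative change. In this toy example, a false positive rate falling from 10% to 7% is a 3-point absolute reduction but a 30% relative one.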

Beyond the AI model’s potential to support and improve clinical decision-making, the researchers also looked to see if their model could improve reader efficiency. While the AI did not surpass the double-reader benchmark, statistically it performed no worse than the second reader.

In a small secondary analysis, they simulated the AI’s role in the double-reading process used by the NHS. In this process, scans are interpreted by two separate radiologists, each of whom reviews the scan and recommends either a follow-up or no action. Any positive finding is referred for biopsy, and in cases where the two readers disagree, the case goes to a third clinical reviewer for a decision.

The simulation compared the AI’s decision with that of the first reader. Scans were only sent to a second reviewer if there was a disagreement between the first reader and the AI. The findings showed that using the AI in this way could reduce the workload of the second reviewer by as much as 88%, which could ultimately help to triage patients in a shorter timeframe.
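The triage rule in that simulation can be sketched in a few lines of Python. This is an illustrative sketch only, not the researchers' code. The function name `second_reader_workload` and the toy decision lists are hypothetical, and each decision is simplified to a boolean (True means recall for follow-up).

```python
from typing import Sequence


def second_reader_workload(first_reader: Sequence[bool], ai: Sequence[bool]) -> float:
    """Fraction of scans that would still need a second human reader.

    Hypothetical helper: a scan goes to the second reader only when the
    first reader and the AI disagree.
    """
    if len(first_reader) != len(ai):
        raise ValueError("decision lists must have the same length")
    if not first_reader:
        raise ValueError("at least one scan is required")
    disagreements = sum(1 for human, model in zip(first_reader, ai) if human != model)
    return disagreements / len(first_reader)


# Toy data (made up for illustration): True = recall, False = no action.
first_reader = [False, False, True, False, False, True, False, False, False, False]
ai_decision = [False, False, True, False, True, True, False, False, False, False]

remaining = second_reader_workload(first_reader, ai_decision)
print(f"Second-reader workload: {remaining:.0%} of scans")
print(f"Workload reduction: {1 - remaining:.0%}")
```

In this toy example the first reader and the AI disagree on one scan in ten, so only that scan would be passed to a second reader. The workload saving depends entirely on how often the two agree.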

According to the team, the findings are exciting and show how AI could assist healthcare screening services around the world. One such practical application could include providing automatic real-time feedback on mammography images, awarding a statistical score which could be used to triage suspected cases more quickly.

However, the researchers add that further testing in larger populations is required.

This story was written by Ryan O’Hare and is © Imperial College London.